Normalization or Standardization: Which Method to Choose for Your Dataset?

Scaling the features of your dataset to a common range is an important step for effective model training and for applying methods like Principal Component Analysis (PCA). But which method should you use: normalization or standardization? In this article, we will explore which technique is best suited to which situation.

By the end of this article, you will understand what normalization and standardization are for, know how to apply each scaling method correctly, and be able to use them to improve your model's performance and your dimensionality reduction results.

Why Scaling?

Scaling brings the values of different columns (features) into a common range or onto a common scale. Why does this matter? When you train a model or apply a dimensionality reduction technique such as Principal Component Analysis (PCA), features measured on very different scales can bias the result. Distance-based and variance-based methods in particular may treat columns with larger values as more important and effectively ignore those with smaller values, which undermines the accuracy and reliability of the model. Scaling addresses this. Normalization and standardization are two scaling methods that put all variables on a comparable scale, so that each feature can contribute on an equal footing, which often improves performance and reliability.
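
To make the effect of mismatched scales concrete, here is a minimal sketch using only the Python standard library. The three data points, the helper names `euclidean` and `min_max_columns`, and the choice of Euclidean distance are illustrative assumptions, not something prescribed by this article.

```python
import math

# Hypothetical toy data: (age in years, annual income in dollars).
a = (25, 50_000)
b = (60, 51_000)   # very different age, similar income
c = (26, 58_000)   # similar age, different income

def euclidean(p, q):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(p, q)))

def min_max_columns(rows):
    """Rescale every column of a list of rows to the range [0, 1]."""
    cols = list(zip(*rows))
    lows = [min(col) for col in cols]
    highs = [max(col) for col in cols]
    return [
        tuple((v - lo) / (hi - lo) for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]

# In raw units the income column dominates: the 35-year age gap between
# a and b barely registers next to the income gap between a and c.
raw_ab = euclidean(a, b)
raw_ac = euclidean(a, c)

# After scaling, both features contribute on a comparable footing.
sa, sb, sc = min_max_columns([a, b, c])
scaled_ab = euclidean(sa, sb)
scaled_ac = euclidean(sa, sc)

print(f"raw:    d(a,b)={raw_ab:.1f}  d(a,c)={raw_ac:.1f}")
print(f"scaled: d(a,b)={scaled_ab:.3f}  d(a,c)={scaled_ac:.3f}")
```

Before scaling, `c` looks roughly eight times farther from `a` than `b` does, purely because income is measured in larger numbers. After scaling, the two distances are nearly equal.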

Normalization

Normalization is a broad concept that encompasses various methods for adjusting data values measured on different scales to a common scale. This process is essential for ensuring that comparisons and analyses are valid. To illustrate the different types of normalization, consider the following points:

- Scale of Data: This can involve transforming data to a range of 0 to 1 or -1 to 1. However, this method is sensitive to outliers: a single extreme value stretches the range and squeezes the remaining values into a narrow part of it, which distorts the distances between data points.

- Outliers: When outliers are present, they can significantly affect the results of normalization, leading to misleading interpretations.

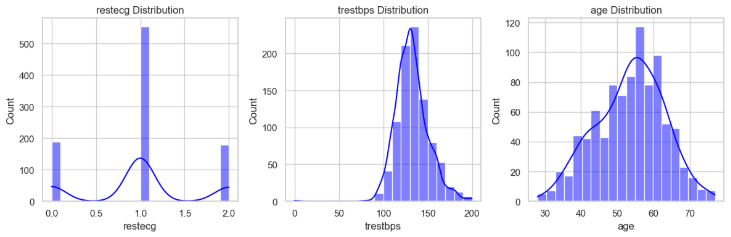

- Distribution Considerations: Normalization techniques may be particularly useful when the data does not follow a clear Gaussian distribution or when the overall distribution of the data is unknown.
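
The outlier sensitivity described in the points above can be seen in a few lines. This is a small sketch with made-up scores; the helper name `min_max` is an assumption chosen for illustration.

```python
def min_max(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

scores = [50, 60, 70, 80, 90]
with_outlier = scores + [1000]   # one hypothetical extreme value

# Without the outlier the values spread evenly over [0, 1].
print(min_max(scores))           # [0.0, 0.25, 0.5, 0.75, 1.0]

# With the outlier, the five ordinary scores are all squeezed below 0.05,
# while the outlier alone takes the value 1.0.
print([round(v, 3) for v in min_max(with_outlier)])
```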

Normalization methods:

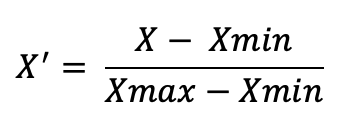

- Min-Max Normalization: Rescales exam scores to a range of 0 to 1, where the lowest score is 0 and the highest score is 1.

- Log Normalization: Applies a logarithmic transformation to salaries, reducing the influence of extremely high salaries on the overall analysis.

- Decimal Scaling: Adjusts product prices by shifting the decimal point, making values smaller while maintaining their relative differences.

- Mean Normalization: Centers product ratings by subtracting the average rating and, in its usual form, dividing by the range (max - min), making it easier to see how each rating compares to the average.
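
The four methods listed above can be sketched in plain Python as follows. The function names and sample values are hypothetical. Two choices here are assumptions that go beyond the text: the log transform uses `log1p` so that zero values are handled, and mean normalization divides by the range, which is the common formulation.

```python
import math

def min_max(values):
    """Min-max normalization: (x - min) / (max - min), result in [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def log_transform(values):
    """Log normalization for non-negative data; log1p(x) = log(1 + x)."""
    return [math.log1p(v) for v in values]

def decimal_scaling(values):
    """Divide by the smallest power of 10 that brings every |x| below 1."""
    largest = max(abs(v) for v in values)
    j = 0
    while largest / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values]

def mean_normalization(values):
    """Mean normalization: (x - mean) / (max - min)."""
    mean = sum(values) / len(values)
    spread = max(values) - min(values)
    return [(v - mean) / spread for v in values]

exam_scores = [50, 60, 70, 80, 90]
salaries = [30_000, 60_000, 1_000_000]
prices = [12.5, 250, 999]
ratings = [1, 2, 3, 4, 5]

print(min_max(exam_scores))
print(log_transform(salaries))
print(decimal_scaling(prices))
print(mean_normalization(ratings))
```

Note that the log transform changes the shape of the distribution, whereas the other three are linear rescalings that only move and stretch it.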

Standardization

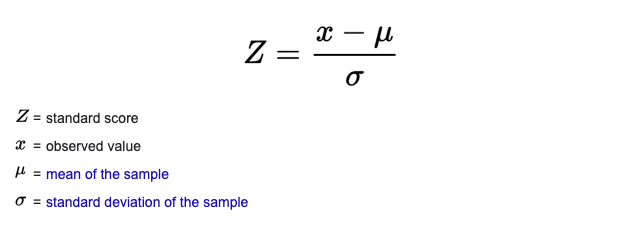

Standardization, also known as z-score scaling, transforms each feature to have a mean of 0 and a standard deviation of 1. While normalization maps data to a specific range, standardization centers the data by subtracting the mean and scales it by dividing by the standard deviation. The transformed data is centered around zero with unit spread, but it is not bounded to a fixed interval. Standardization is typically applied when the distribution is roughly Gaussian, and it is less sensitive to outliers than min-max normalization, although extreme values still shift the mean and inflate the standard deviation. Because it is a linear transformation, it changes the location and scale of each feature but not the shape of its distribution, so it does not make non-Gaussian data Gaussian.
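
As a minimal sketch of z-score scaling, the following uses Python's built-in `statistics` module. The sample heights and the function name `standardize` are illustrative assumptions. The population standard deviation (`pstdev`) is used here, which is the same convention scikit-learn's `StandardScaler` follows.

```python
import statistics

def standardize(values):
    """Z-score scaling: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)   # population standard deviation
    return [(v - mean) / std for v in values]

heights_cm = [150, 160, 170, 180, 190]   # hypothetical sample
z = standardize(heights_cm)

print([round(v, 3) for v in z])
print("mean:", round(statistics.fmean(z), 6))
print("std: ", round(statistics.pstdev(z), 6))
```

The output has a mean of 0 and a standard deviation of 1 (up to floating-point rounding), but unlike min-max scaling the values are not confined to a fixed interval.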

What you should consider before using standardization:

- Gradient-based and Distance-based Algorithms: Standardization is strongly recommended for algorithms like Support Vector Machines (SVM), whose margins and kernels depend on distances between points. Models such as linear regression and logistic regression do not strictly require standardization, but they can benefit from it, especially when they are trained with gradient descent or regularization and feature magnitudes vary significantly. Scaling balances the contribution of each feature and helps the optimizer converge.

- Dimensionality Reduction: Standardization is important in dimensionality reduction techniques like Principal Component Analysis (PCA), because PCA identifies the directions of maximum variance in the data. Centering the data (subtracting the mean) is not enough on its own: variance depends on the units of measurement, so features with larger scales would dominate the principal components.

- Data Distribution: Standardization is typically used when the data is roughly Gaussian. It is less affected by outliers than min-max normalization, though not immune to them. It changes only the location and scale of each feature, not the shape of its distribution.
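
The PCA point above can be illustrated with a short experiment. This is a sketch that assumes NumPy is available. The synthetic features, the random seed, and the helper `first_component` (which computes PCA through an eigendecomposition of the covariance matrix) are illustrative assumptions, not a reference implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Two hypothetical, independently generated features on very different scales.
height_m = rng.normal(1.7, 0.1, n)        # spread of about 0.1
income = rng.normal(50_000, 10_000, n)    # spread of about 10,000
X = np.column_stack([height_m, income])

def first_component(data):
    """Return the first principal direction and its share of total variance."""
    centered = data - data.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(cov)   # ascending eigenvalues
    return eigenvectors[:, -1], eigenvalues[-1] / eigenvalues.sum()

# Centering alone: the first component points almost entirely along income.
raw_direction, raw_share = first_component(X)

# Standardizing first: neither feature dominates.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
std_direction, std_share = first_component(X_std)

print("raw:         ", np.round(raw_direction, 4), round(float(raw_share), 4))
print("standardized:", np.round(std_direction, 4), round(float(std_share), 4))
```

On the raw data the first component explains practically all of the variance simply because income is measured in large numbers. After standardization, the variance is split roughly evenly, which is what you would expect for two unrelated features.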

Normalization vs. Standardization: Key Differences

| Aspect | Standardization | Normalization |
|---|---|---|
| Definition | Transforms data to have a mean of 0 and a standard deviation of 1. | Scales features to a specific range, typically [0, 1]. |
| Formula | (X - mean) / standard deviation | (X - min) / (max - min) |
| Use Cases | Used when data roughly follows a Gaussian distribution. | Used when data does not follow a Gaussian distribution or the distribution is unknown. |
| Outlier Sensitivity | Less sensitive to outliers compared to normalization. | More sensitive to outliers, which can distort the scaling. |
| Effect on Relationships | Linear rescaling: preserves the shape of each feature's distribution and the correlations between features; output is unbounded. | Linear rescaling: also preserves shape and correlations; output is bounded to the chosen range. |

Conclusion

In the world of data preprocessing, both standardization and normalization play vital roles in preparing your data for analysis. Think of standardization as giving your data a cozy little home where everything has a mean of 0 and a standard deviation of 1, while normalization is like ensuring your data fits perfectly into a neat box, usually between 0 and 1.

Whether you’re scaling your features or centering them, each method has its unique charm and usefulness. So, the next time you're faced with a dataset, you’ll know exactly which tool to pull out of your toolbox!

Stay tuned for our next article, where we’ll dive deeper into the enchanting world of feature engineering. We promise it’ll be a thrilling adventure full of insights and fun!